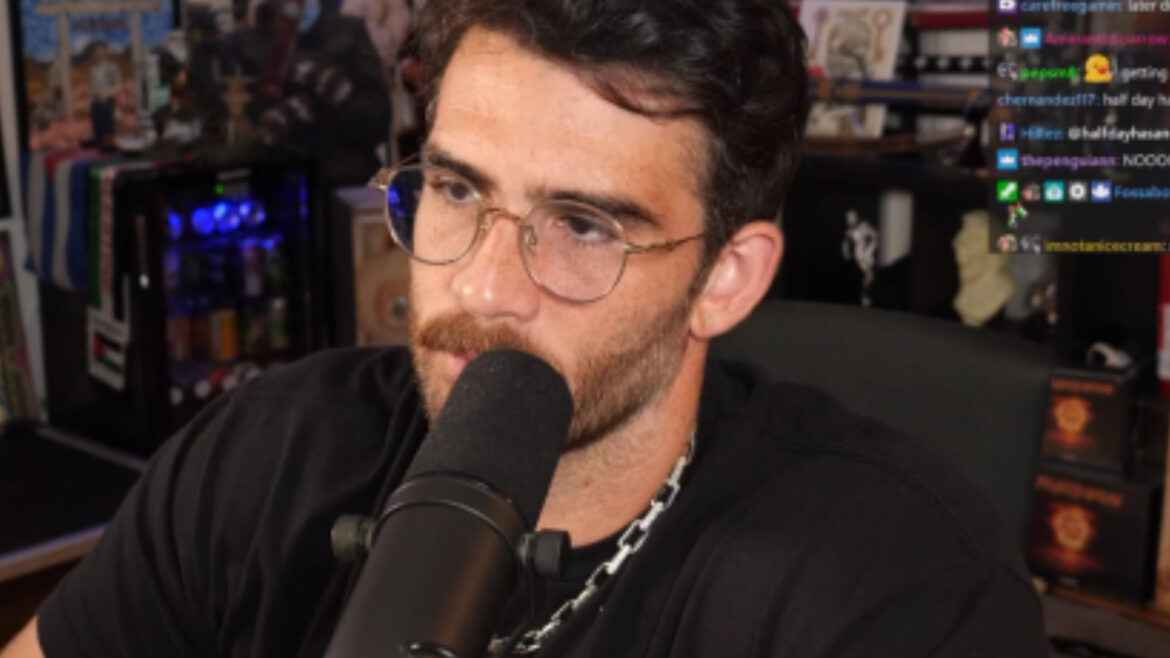

Twitch streamer Hasan is the latest content creator saying he won’t attend TwitchCon, stating that his presence could put others at risk.

TwitchCon is set for October 17-19 in San Diego, California. The annual event gives streamers and viewers a chance to interact in person, but this year, fans will be without some of the biggest stars.

Notably, Valkyrae and QTCinderella said they were “afraid” to attend the event and will be staying home due to safety issues. QT in particular cited a situation where a creator was stabbed to death in Japan.

Twitch CEO Dan Clancy dismissed the concerns, stating that the company takes security “extremely seriously” and prevents users who are banned on the platform from attending, but his response has done little to change opinions.

On September 29, political streamer Hasan revealed that he, too, will be ditching TwitchCon 2025, seemingly referencing the assassination of Charlie Kirk as the catalyst for his decision.

Hasan backs out of TwitchCon 2025

In response to a fan who said they miss the “social Hasan era,” the streamer explained that he believes his appearance could put the whole event in danger.

“There is, unfortunately, an obvious setback there. One, all the political incidents that have taken place. And two, how those political incidents have had pretty significant consequences in how much I can go out in the real world and socialize with other people,” he said.

On September 10, right-wing YouTuber and political activist Charlie Kirk was assassinated while speaking at Utah Valley University.

According to Hasan, that means he won’t be going to TwitchCon, but he claims it’s not because he fears for his own safety.

“I’m literally not going to TwitchCon because I’m afraid of the safety of others. I don’t want to put them in the f**king crosshairs if some psycho freak decides, ‘I’m gonna go there.’ I’m very publicly not going to TwitchCon for that reason.”

Hasan has had a controversial history on Twitch. In 2024, he showed a video about the Houthi movement to fellow Twitch streamer Nmp, which sparked considerable backlash from viewers. The group has been designated a terrorist organization by the US State Department.

The site’s CEO, Dan Clancy, also faced calls from Democratic Rep. Ritchie Torres to “stop popularizing those who popularize antisemitism,” with Torres singling out Hasan’s content specifically.

Meanwhile, fellow Twitch streamer Asmongold has urged women not to attend TwitchCon and took aim at the platform’s claims that it takes security seriously.

“Last year, nmplol and wake were sexually assaulted by a streamer and Twitch didn’t press charges or pursue any form of legal action against him,” he blasted. “If I was a woman, I would never go to TwitchCon.”
