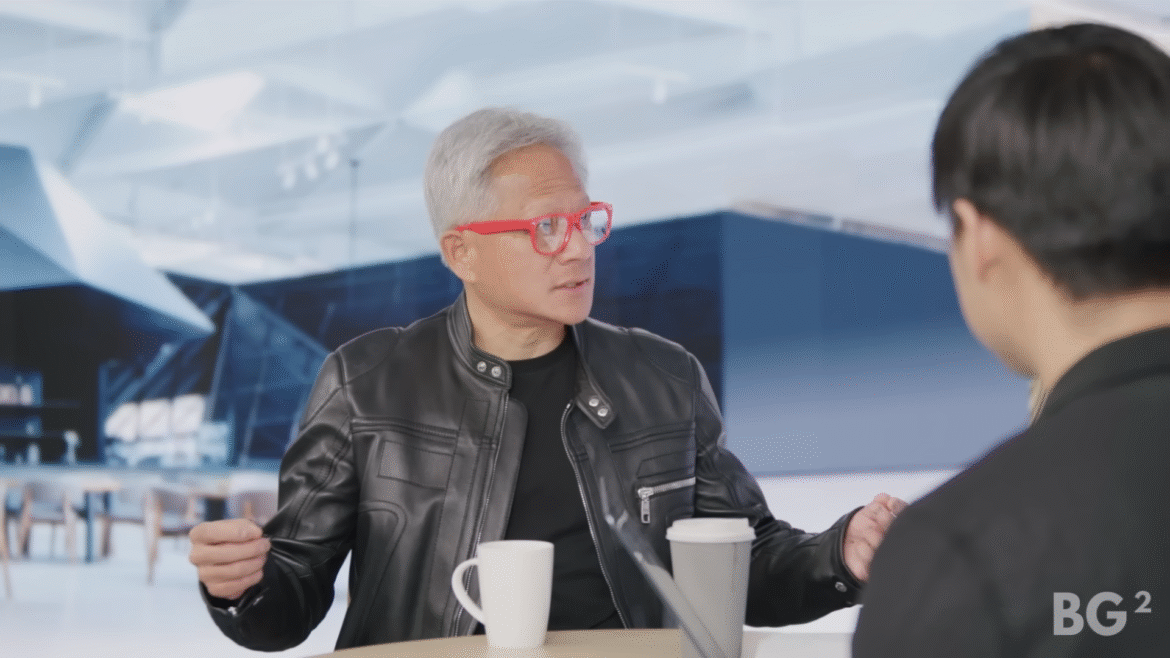

The explosion of generative AI over the last few years signals a shift in the job market, and with it come worries about job instability and losses. With big players like Nvidia, Meta, and X, as well as governments worldwide, further committing to AI, questions are being put to those at the very top.

In a recent interview with the British news channel Channel 4, Nvidia CEO Jensen Huang gave his thoughts on what’s next. He says, “If you’re an electrician, if you’re a plumber, if you’re a carpenter, we’re going to need hundreds of thousands of them. To build all of these factories.”

Huang argues, “The skilled craft segment of every economy is going to boom”.


“You’re going to have to build. You’re going to keep doubling and doubling… every single year.”

Just last week, Nvidia shared plans to invest $100 billion in OpenAI. The money is intended to go towards greater supplies of data centre chips to train upcoming AI models. Just weeks before that, Nvidia’s earnings report showed it made almost 10 times more from AI than from gaming. Nvidia’s stock price is also at an all-time high, more than 10 times what it was half a decade ago.


OpenAI’s spending has also skyrocketed alongside ChatGPT’s popularity, and the US government is firmly behind cementing “US dominance in artificial intelligence”. From a PC gaming perspective, we’ve even seen brands like Razer jump on the AI bandwagon. All of this is to say that AI doesn’t appear to be going away, and it has hundreds of billions of dollars in backing.

(Image credit: Nvidia)

When asked what could happen if the UK doesn’t ‘grasp this opportunity’, Huang says, “just as the last industrial revolution, the reason why it came about was because you needed it. And so the industrial revolution that started here in the UK came out of need. You need it now, too.”

Earlier this year, the UK’s Department for Science, Innovation and Technology signed an agreement with OpenAI to push the chatbot into the public sector in a further bid to make the UK an AI powerhouse.

Though highlighting future demand for skilled trades speaks to current fears around the job market, it doesn’t address those whose degrees and experience are in fields being replaced. Senator Bernie Sanders argued in June that “Artificial intelligence is going to displace millions and millions of workers”. A month later, OpenAI’s Sam Altman said he thinks some jobs will be “totally, totally gone” due to AI. In August, a former Google executive argued that AI will lead to a “short-term dystopia” because it will struggle to create new jobs for the people it replaces.

Huang tells reporters, “You’re going to be building out AI infrastructure here in the UK for a decade,” but it’s not clear what the plan is for workers after that.
