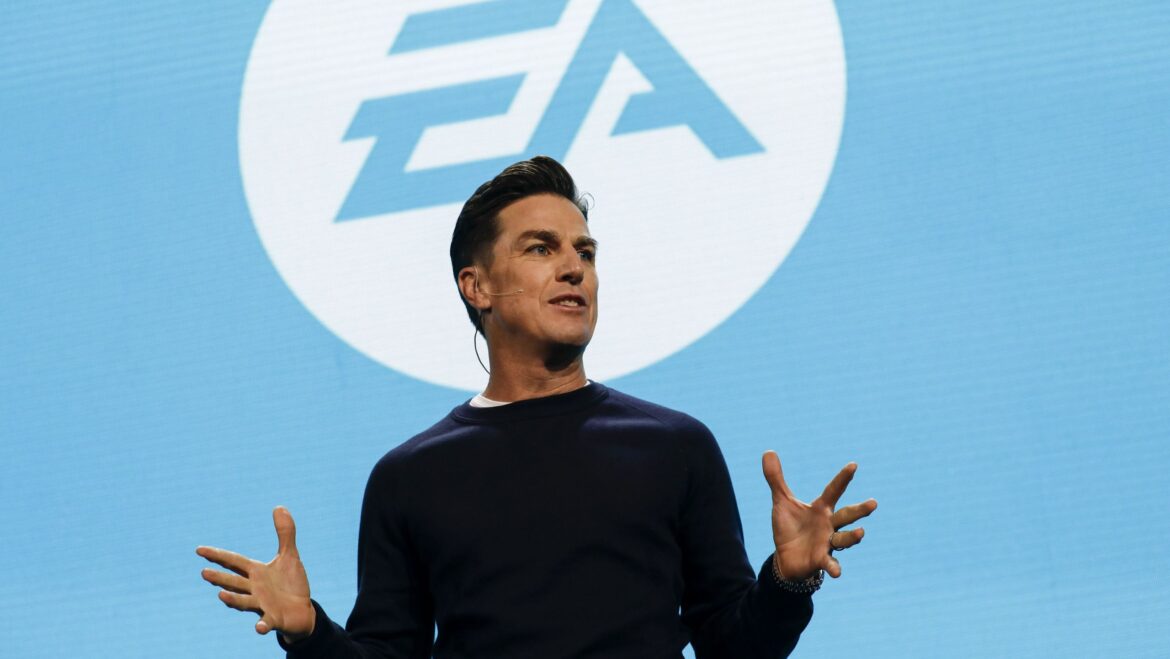

The leveraged buyout of EA, which will see private equity firms and Saudi Arabia’s Public Investment Fund take control of the megapublisher for $55 billion (and saddle it with $20 billion in debt), has us all wondering what the new owners are going to do with it.

As games industry analyst Mat Piscatella said this week, no one really knows, but the speculation we’re hearing from analysts and corporate finance experts is that EA’s new owners aren’t likely to shake things up in the immediate future, and will probably do what you’d expect: focus on the publisher’s existing live service moneymakers as it pays off that $20 billion in debt.

Philip Alberstat, managing director at DBD Investment Bank, doesn’t foresee a Toys ‘R’ Us-style descent into bankruptcy as a result of the new debt on EA’s balance sheet.

“EA generates approximately $7.5 billion annually from franchises like Apex Legends, Battlefield, and FIFA [now EA Sports FC],” said Alberstat. “That flow of cash gives EA a real capacity to service the $20 billion in debt. The Toys ‘R’ Us comparison gets thrown around, but in reality that was a dying retailer. EA has sustainable revenue from live services across multiple platforms.”

Being freed from the scrutiny of public investors could “in theory give EA breathing space to push innovation in new IP and titles” beyond its sports games, says Phylicia Koh, general partner at investment firm Play Ventures. But Newzoo director of market intelligence Emmanuel Rosier, a former EA strategist himself, notes that “consolidation often brings more cautious portfolio management.”

“Publishers may double down on proven franchises rather than taking risks on experimental projects, which could narrow the creative pipeline over time,” wrote Rosier in a recent newsletter about the buyout.

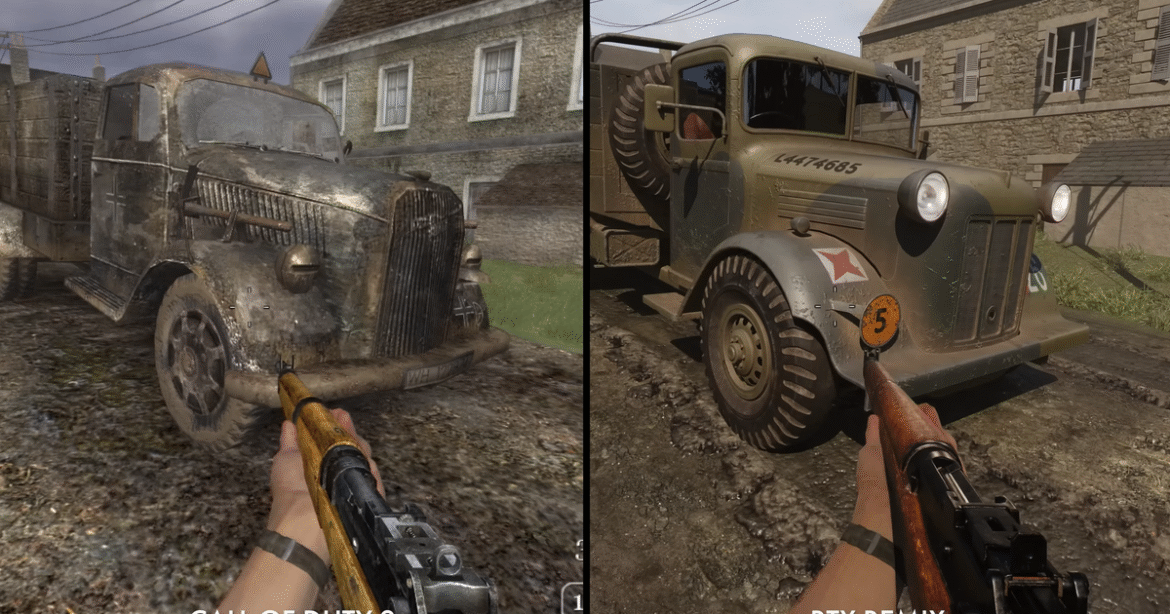

EA’s biggest moneymakers are unsurprisingly its sports games, according to Newzoo. (Image credit: Newzoo)

Consolidation, and the resulting layoffs and studio closures, has been the theme of the 2020s games industry, with Microsoft, Tencent, Embracer and others snapping up studios left and right. Rosier says that “opportunities may grow for AA studios and indie developers to stand out” as a result of that trend. Alberstat shares that view, saying that gaming is “moving into a new phase where the biggest players need serious capital to compete,” and that those players are even more risk-averse as a result.

“We’ll see more consolidation at the top, but also more room for focused studios doing what large publishers can’t: taking chances on new ideas,” said Alberstat on gaming’s future. “The industry isn’t dying, it’s splitting into two different models. You have capital-intensive blockbusters on one side and creative independent development on the other. Both can thrive. The question is whether the consolidation leaves enough buyers in the market when those independent studios are ready to exit.”

On the topic of what large publishers will and won’t take a chance on, BioWare is in a precarious position. EA already tried to get the struggling RPG studio to make a live service hit with Anthem and it didn’t work, and it’s hard to imagine the politically progressive Mass Effect and Dragon Age creator thriving under the ownership of Jared Kushner and Saudi Arabia. Its staff is worried.

Judging by Saudi Arabia’s acquisition of mobile developer Scopely, EA may be allowed to operate independently “in the short-medium term,” Koh said, adding, however, that the publisher has a challenge ahead as it balances the wants of its three primary owners: “I imagine PIF will want some job creation for the Saudi market.”

For Rosier, “the future of Battlefield, The Sims, Apex Legends, Mass Effect, and Dragon Age is less clear” than the future of the sports games at the top of the pile. “These IPs could be streamlined, spun out, or restructured through partnerships, depending on how the new owners assess profitability and growth potential, as well as the post-closing portfolio decisions,” he said.
