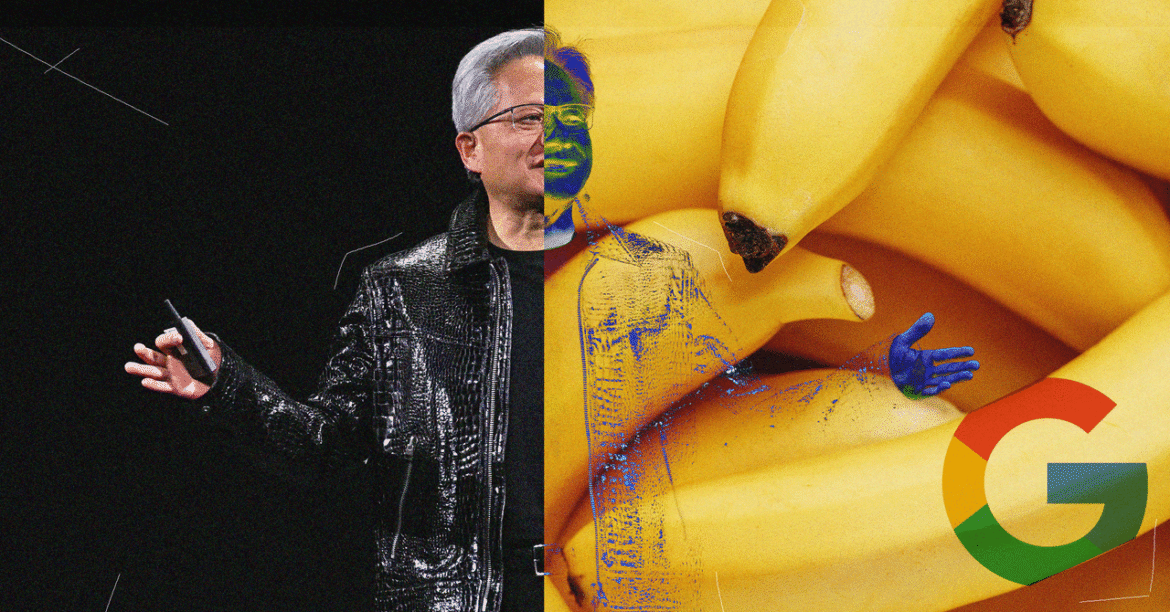

Nvidia CEO Jensen Huang is in London, standing in front of a room full of journalists, outing himself as a huge fan of Gemini’s Nano Banana. “How could anyone not love Nano Banana? I mean Nano Banana, how good is that? Tell me it’s not true!” He addresses the room. No one responds. “Tell me it’s not true! It’s so good. I was just talking to Demis [Hassabis, CEO of DeepMind] yesterday and I said ‘How about that Nano Banana! How good is that?’”

It looks like lots of people agree with him: The Nano Banana AI image generator, which launched in August and allows users to make precise edits to AI images while preserving the quality of faces, animals, or other objects in the background, has proved so popular that it drove a surge of 300 million images for Gemini in the first few days of September alone, according to a post on X by Josh Woodward, VP of Google Labs and Google Gemini.

Huang, whose company was one of several big US technology companies that announced investments in data centers, supercomputers, and AI research in the UK on Tuesday, is on a high. Speaking ahead of a white-tie event with UK prime minister Keir Starmer (where he plans to wear custom black leather tails), he’s boisterously optimistic about the future of AI in the UK, saying the country is “too humble” about its potential for AI advancements.

He cites the UK’s pedigree in areas as varied as the industrial revolution, steam trains, DeepMind (now owned by Google), and university researchers, as well as other tangential skills. “No one fries food better than you do,” he quips. “Your tea is good. You’re great. Come on!”

Nvidia announced a $683 million equity investment in datacenter builder Nscale this week, a move that—alongside investments from OpenAI and Microsoft—has propelled the company to the epicenter of this AI push in the UK. Huang estimates that Nscale will generate more than $68 billion in revenues over six years. “I’ll go on record to say I’m the best thing that’s ever happened to him,” he says, referring to Nscale CEO Josh Payne.

“As AI services get deployed—I’m sure that all of you use it. I use it every day and it’s improved my learning, my thinking. It’s helped me access information, access knowledge a lot more efficiently. It helps me write, helps me think, it helps me formulate ideas. So my experience with AI is likely going to be everybody’s experience. I have the benefit of using all the AI—how good is that?”

The leather-jacket-wearing billionaire, who previously told WIRED that he uses AI agents in his personal life, has expanded on how he uses AI tools other than Nano Banana for most everyday tasks, including his public speeches and research.

“I really like using an AI word processor because it remembers me and knows what I’m going to talk about. I could describe the different circumstance that I’m in and yet it still knows that I’m Jensen, just in a different circumstance,” Huang explains. “In that way it could reshape what I’m doing and be helpful. It’s a thinking partner, it’s truly terrific, and it saves me a ton of time. Frankly, I think the quality of work is better.”

His favorite tool “depends on what I’m doing,” he says. “For something more technical I will use Gemini. If I’m doing something where it’s a bit more artistic I prefer Grok. If it’s very fast information access I prefer Perplexity—it does a really good job of presenting research to me. And for near everyday use I enjoy using ChatGPT.”

“When I am doing something serious I will give the same prompt to all of them, and then I ask them to, because it’s research oriented, critique each other’s work. Then I take the best one.”

In the end though, all topics lead back to Nano Banana. “AI should be democratized for everyone. There should be no person who is left behind, it’s not sensible to me that someone should be left behind on electricity or the internet of the next level of technology,” he says.

“AI is the single greatest opportunity for us to close the technology divide,” says Huang. “This technology is so easy to use—who doesn’t know how to use Nano?”