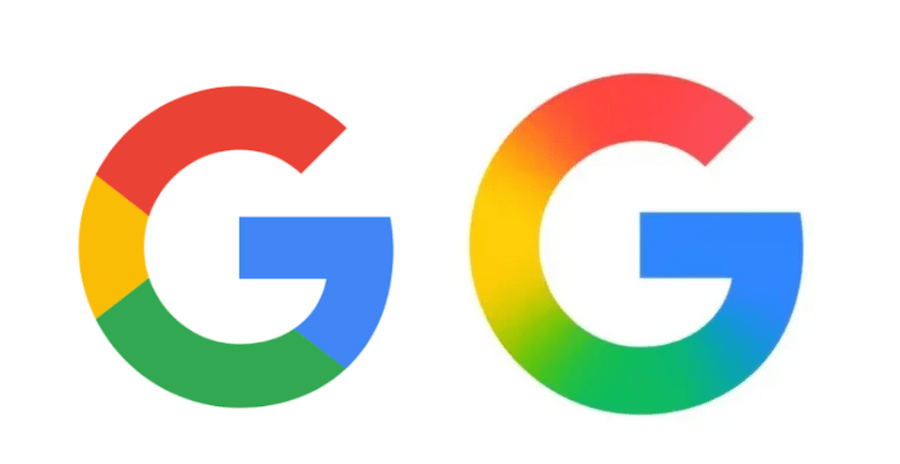

Google is making the gradient “G” its new company-wide logo, according to an announcement on Monday. The new logo began to surface in the Google app on Android and iOS in May, and the design will soon appear across all of the company’s platforms, marking Google’s first big logo change in 10 years.

The colorful “G” logo Google introduced in 2015 kept the red, yellow, green, and blue separate. The new logo blends the four colors together and makes them brighter, bringing the design in line with the company’s gradient Gemini logo. Google says the change reflects its “evolution in the AI era.”

Along with the new “G,” Google quietly updated its Google Home logo to match the new look. Google says the design will start rolling out more widely in the “coming months,” which means you may soon see the gradient look make its way to the company’s other apps, too, like Gmail, Drive, Meet, and Calendar.