After Apple removed ICEBlock, an app used to report on the activity of Immigration and Customs Enforcement (ICE) agents, from the App Store, 404 Media reports that Google is also removing similar apps from the Play Store. In a statement to Engadget, Google said “ICEBlock was never available on Google Play, but we removed similar apps for violations of our policies.”

Google says it decided to remove apps that shared the location of a vulnerable group after a violent act that involved that group and a similar set of apps. It suggests the apps were also removed because they didn’t adequately moderate user-generated content. To be offered in the Play Store, apps with user-generated content must clearly define what is and isn’t objectionable content in their terms of service, and those terms must line up with Google’s definitions of inappropriate content for Google Play.

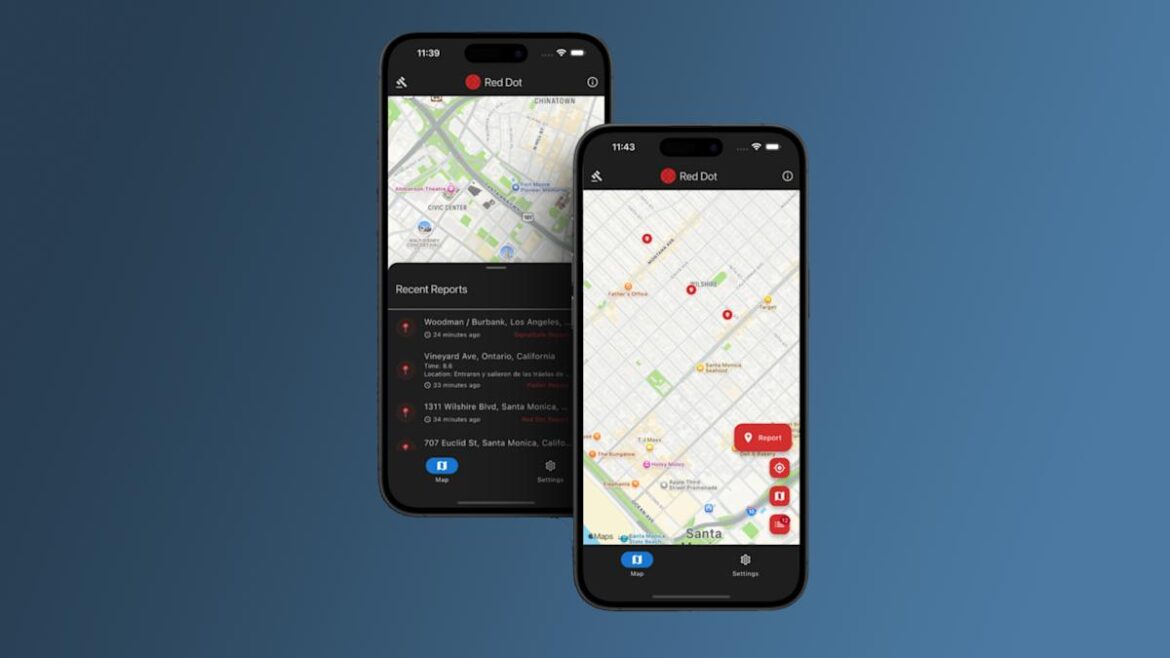

404 Media’s report focuses specifically on Red Dot, an app that both Google and Apple removed. Like ICEBlock, Red Dot was designed to let users report ICE activity in their neighborhood. Rather than relying solely on user submissions, the app’s website says that it “aggregates verified reports from multiple trusted sources” and then combines those sources to determine where to mark activity on a map of your area. The site also states that “Red Dot never tracks ICE agents, law enforcement, or any person’s movements” and that its developers “categorically reject harassment, interference, or harm toward ICE agents or anyone else.” Despite those claims, the app is not currently available to download from either the Play Store or the App Store.

The pushback against ICE-tracking apps appeared to begin in earnest after a September 24 shooting at a Dallas ICE facility that killed one detainee and injured two others. According to an FBI agent who spoke to The New York Times, the shooter “had been following apps that track the location of ICE agents” in the days leading up to the attack.

Apple pulled the ICEBlock app from the App Store yesterday following a request from US Attorney General Pam Bondi. In a statement shared with Fox Business, Bondi said that “ICEBlock is designed to put ICE agents at risk just for doing their jobs, and violence against law enforcement is an intolerable red line that cannot be crossed.” Apple complied. “Based on information we’ve received from law enforcement about the safety risks associated with ICEBlock, we have removed it and similar apps from the App Store,” the company told the publication.

Google says it didn’t receive a similar request to remove apps from the Play Store; instead, the company appears to be acting proactively. The test for both platforms going forward is whether developers can offer these apps at all without having them removed again.