
TCL QM9K: Two-minute review

The TCL QM9K is the final TV series the company launched in 2025, and TCL clearly saved the best for last. As the company’s flagship mini-LED TV series, it delivers TCL’s highest level of performance along with its latest features, some of them exclusive to the QM9K.

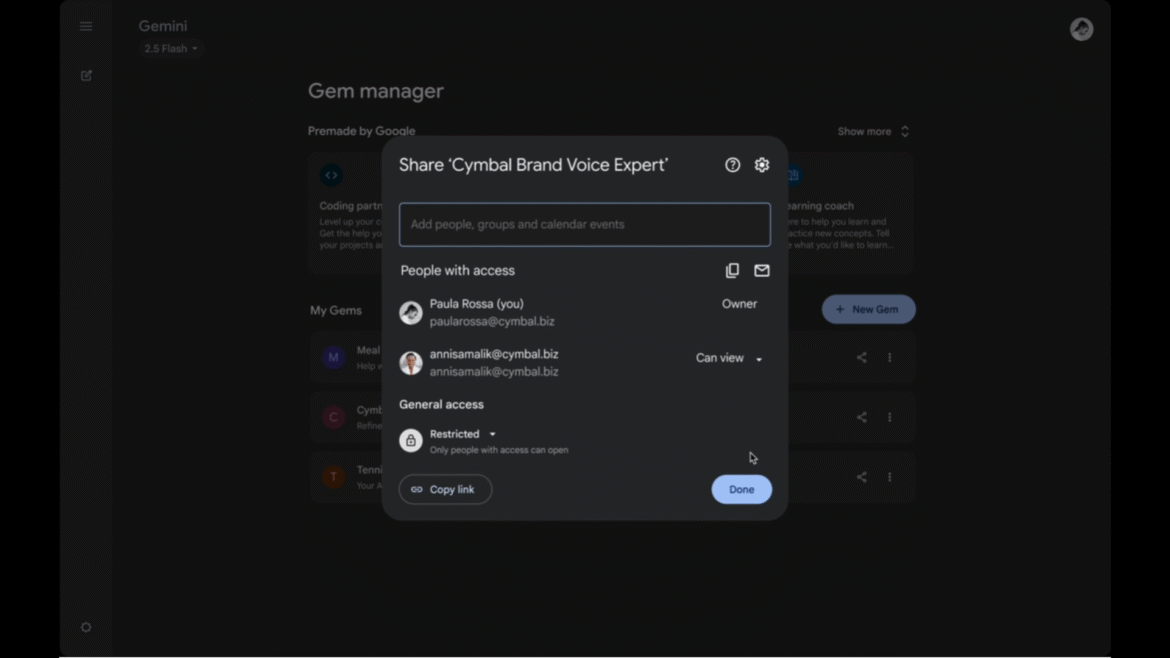

A key exclusive feature of the TCL QM9K at launch is Google TV with Gemini. This adds the Gemini AI chatbot to what is otherwise the same Google TV smart interface found on many of the best TVs. Gemini enables you to conduct not just content searches, but research on essentially any topic, from dinner recipes to ancient Roman civilization, using the TV’s built-in far-field mic.

The contextual, conversational Gemini lets you ask follow-up questions without losing the thread, and for those concerned about privacy, queries get deleted after 10 minutes. Other Gemini capabilities include image generation, and the screensavers you create can pop up on the screen automatically when you enter the room, thanks to the TV’s built-in presence sensor (another QM9K exclusive).

On the picture-quality front, the QM9K features a mini-LED panel with up to 6,500 local dimming zones, and TCL’s Halo Control System minimizes backlight blooming, something it does remarkably well. The result is an exceptionally bright picture with powerful contrast, deep, uniform blacks, and detailed shadows.

Other features that enhance the QM9K’s picture are an AIPQ Pro processor, a wide viewing angle feature, and an anti-reflective screen. Dolby Vision IQ and HDR10+ high dynamic range formats are both supported, and the TV features Filmmaker Mode and is IMAX Enhanced.

The QM9K’s Audio by Bang & Olufsen speaker system provides potent Dolby Atmos sound with clear dialogue and an impressive level of bass for a TV. And if you want even better sound, the QM9K is one of the first TVs to support Dolby FlexConnect, which can output wireless Atmos sound to TCL’s Z100 speakers and Z100-SW subwoofer, as well as automatically configure the system for optimal performance.

TCL’s ZeroBorder design for the QM9K reduces the screen’s bezel width to 3.2mm for a striking, “all-picture” look. A height-adjustable pedestal stand provides solid support and can clear space for a soundbar, and the TV’s edges taper in at the sides to give it a slim profile.

A respectable number of gaming features are provided on the QM9K, including two HDMI 2.1 ports with 4K 144Hz support, FreeSync Premium Pro, ALLM, and Dolby Vision gaming. A pop-up Game Bar menu lets you make quick adjustments, and a Game Accelerator 288 feature enables 288Hz gaming at 1080p resolution.


TCL QM9K review: Price and release date

The QM9K’s high brightness and refined local dimming help to bring out fine details in images (Image credit: Future)

- Release date: September 2025

- 65-inch: $2,999.99

- 75-inch: $3,499.99

- 85-inch: $3,999.99

- 98-inch: $5,999.99

The TCL QM9K is available in 65- to 98-inch screen sizes. As TCL’s flagship TV, it is priced higher than other series in the company’s mini-LED TV lineup, with the 75-inch model I tested priced at $3,499 at launch. To put the QM9K’s price in perspective, the step-down TCL QM8K mini-LED launched in May at $3,199 for the 75-inch size, and is now selling for around $2,000.

As with the QM8K, prices for the QM9K are already starting to drop, with the 75-inch model reduced to $2,499. Other 75-inch flagship mini-LED TVs competing with the QM9K include the Samsung QN90F (currently selling for $2,099) and the Hisense U8QG (currently selling for $1,899).

TCL QM9K review: Specs

| Spec | Details |
|---|---|
| Screen type | QLED with mini-LED |
| Refresh rate | 144Hz |
| HDR support | Dolby Vision, HDR10+, HDR10, HLG |
| Audio support | Dolby Atmos |
| Smart TV | Google TV |
| HDMI ports | 4 (2x HDMI 2.1) |
| Built-in tuner | ATSC 3.0 |

TCL QM9K review: Benchmark results

TCL QM9K review: Features

The QM9K has two HDMI 2.1 ports with 4K 144Hz support, plus two HDMI 2.0 ports (Image credit: Future)

- Wide viewing angle and anti-reflective screen

- Audio by Bang & Olufsen

- Google TV with Gemini

The TCL QM9K features a high-contrast display panel with a wide viewing angle and an anti-reflective screen. A Halo Control System with a 23-bit controller and dynamic light algorithm is used to minimize blooming from the TV’s mini-LED backlight, which provides up to 6,500 local dimming zones, and the company’s AIPQ Pro processor is used for upscaling and noise reduction.

High dynamic range support includes both the Dolby Vision IQ and HDR10+ formats. The QM9K is also IMAX Enhanced certified and features a Filmmaker Mode picture preset, a new addition to TCL TVs in 2025.

The QM9K’s Audio by Bang & Olufsen built-in speaker system features Dolby Atmos support, and there’s pass-through for DTS:X. Upfiring speakers for Atmos height effects are located on the TV’s top surface, and there are dual subwoofers on the back panel.

TCL’s ZeroBorder design for the QM9K provides a vanishingly thin bezel that allows virtually the full screen area to be filled with the picture. A built-in presence sensor can be configured to turn the TV on when it detects someone nearby and switch it to ambient mode, displaying artwork or photos. A built-in far-field mic lets you use voice commands to control the TV, and the backlit remote control also has a built-in mic that lets you do the same.

The QM9K is the first Google TV with Gemini AI, which lets you carry out contextual, voice-based content searches, among other things. Gemini can be used to control Google Assistant smart home devices, and the TV additionally works with Alexa and Apple HomeKit and has built-in AirPlay and Chromecast support.

Gaming features include 4K 144Hz support on two of the TV’s four HDMI ports, FreeSync Premium Pro, and Game Accelerator 288, which lets you game in 1080p resolution at a 288Hz refresh rate. There is also a Game Bar onscreen display that lets you make gaming-related adjustments without having to exit to a menu.

TCL QM9K review: Picture quality

The QM9K’s high brightness and anti-glare screen make it a great option for bright room viewing (Image credit: Future)

- Exceptional brightness and contrast

- Rich color and excellent detail

- Wide viewing angle

When I previously reviewed the TCL QM9K’s step-down sibling, the TCL QM8K, one of that TV’s high points was its exceptional brightness. In my testing, the QM9K proved to be even brighter than the QM8K, with a measured peak HDR brightness of 3,322 nits in Filmmaker Mode and 3,811 nits in Standard mode. Fullscreen HDR brightness was also impressive, with the QM9K hitting around 660 nits in both Filmmaker and Standard modes.

The QM9K’s HDR color gamut coverage was also very good, with the TV measuring 96.7% for UHDA-P3 and 79.7% for BT.2020. Color point accuracy was similarly good: the Delta-E value (the margin of error between the test pattern source and what’s shown on-screen, with a result lower than 3 considered undetectable by the human eye) averaged 1.8 for color and 2.8 for grayscale.

The QM9K’s high brightness, combined with its extended, accurate color and refined local dimming, translated into an exceptional picture. Watching the animated Spider-Man: Into the Spider-Verse on 4K Blu-ray, I found that a scene where Spider-Man battles the Green Goblin had powerful contrast that made its psychedelic color palette pop more than I’m used to seeing on most TVs. The film’s animated textures were also displayed with a high level of detail, giving it a near-3D effect.

The QM9K’s impressive detail carried over to Ripley, which I streamed in 4K with Dolby Vision from Netflix. In the TV’s Dolby Vision Dark picture mode, textures in clothing and objects were clearly visible, giving the picture a strong sense of depth. The show’s black-and-white images looked completely uniform, and subtle gray tones were easily revealed.

Test patterns on the Spears & Munsil Ultra HD Benchmark 4K Blu-ray confirmed that the QM9K’s CrystGlow WHVA panel maintained uniform color and contrast over a wide viewing angle just as well as the TCL QM8K did when I tested it. The disc’s local dimming torture tests also confirmed the effectiveness of TCL’s Halo Control System at minimizing backlight blooming in high-contrast images.

With its Ultra Wide Angle feature, the QM9K’s picture looks uniformly good over a range of seating positions (Image credit: Future)

The only area where the QM9K stumbled a bit was motion handling. In a scene from the James Bond film No Time to Die where Bond walks across a craggy hillside cemetery, there was a fair amount of judder, although I could eliminate it by adjusting the Custom mode in the TV’s Motion menu without introducing too much “soap opera” effect.

There was also a very slight degree of vignetting at the edges of the screen, an effect likely related to the TV’s ZeroBorder design. This was mostly visible on test patterns, however, and I rarely spotted it on TV shows or movies.

The QM9K’s anti-reflection screen proved effective at reducing screen glare from overhead lights and maintaining picture contrast. I did see some reflections from lamps placed directly opposite the screen, but they were minimal and mostly an issue with dark images.

- Picture quality score: 4.5/5

TCL QM9K review: Sound quality

The QM9K’s Audio by Bang & Olufsen speaker system features upfiring drivers for Dolby Atmos height effects and dual subwoofers on the TV’s rear (shown) (Image credit: Future)

- Audio by Bang & Olufsen with Beosonic interface

- Dolby FlexConnect support

- Potent sound with good directional effects

TCL doesn’t provide much in the way of audio specs for its TVs, but the QM9K does have an Audio by Bang & Olufsen Dolby Atmos speaker system with upfiring drivers and built-in subwoofers. There’s no DTS Virtual:X processing, but DTS:X pass-through is supported.

The QM9K is also compatible with Dolby FlexConnect, which lets you use the TV with TCL’s Z100 wireless FlexConnect speakers and Z100-SW wireless subwoofer. FlexConnect automatically configures and calibrates the system from the TV, and it gives you the freedom to position the speakers anywhere you want in the room, not just in the standard home theater positions beside the screen or behind you to the sides.

One notable feature related to the Audio by Bang & Olufsen on the QM9K is its Beosonic interface, which becomes available when you select the TV’s Custom sound preset.

The graphic interface features a cursor that allows you to move between Relaxed, Energetic, Bright, and Warm quadrants to adjust the sound to your liking. Using this, I was able to warm up the TV’s too-bright sound, though I ended up leaving the Movie preset in place for most of my testing.

Overall, I found the QM9K’s sound to be pretty potent, with clear dialogue and a good helping of bass. When I watched the chase scene through the town square in No Time to Die, the crashes and gunfire had good impact and directionality, and the sound of ringing church bells in the Dolby Atmos soundtrack had a notably strong height effect.

I imagine many viewers will be fine with using the QM9K’s built-in speakers, though I’d recommend adding one of the best soundbars or taking advantage of its FlexConnect feature to get sound quality that equals the picture.

- Sound quality score: 4.5/5

TCL QM9K review: Design

The TV’s pedestal stand can be installed flush or at an elevated height (shown) to accommodate a soundbar (Image credit: Future)

- ZeroBorder design

- Height-adjustable pedestal stand

- Full-size, backlit remote control

TCL’s ZeroBorder design minimizes the QM9K’s bezel to a vanishingly small 3.2mm, giving it a true “all-picture” look. The panel itself has a two-inch depth, although its sides taper inward in a manner that gives the TV a slimmer look when viewed from the side.

A pedestal stand comes with the QM9K in screen sizes up to 85 inches, while the 98-inch version uses support feet. The stand is made of metal covered in plastic with a faux brushed-metal finish, and it has two height positions, with the higher option clearing space for a soundbar.

Connections on the QM9K include four HDMI ports (one with eARC), two USB Type-A ports, Ethernet, and an optical digital audio output. There is also an ATSC 3.0 tuner input for connecting an antenna.

TCL’s full-size remote control features a backlit keypad. The layout is uncluttered and includes three direct access buttons for apps (Netflix, Prime Video, and YouTube), an input select button, and a Free TV button that takes you to the TV’s portal of free, ad-supported streaming channels.

TCL QM9K review: Smart TV and menus

The QM9K’s Google TV smart interface with the Gemini AI icon (Image credit: Future)

Google TV’s Live TV grid guide (Image credit: Future)

- Google TV with Gemini AI

- Live program grid with broadcast channels

- Quick menu for basic adjustments

The QM9K is notable for being the first Google TV with Gemini AI chatbot support. This feature lets you essentially carry on a conversation with the TV using either the set’s built-in far-field mic or the remote control’s mic.

Using Gemini, you can ask a question like, “Show me a list of samurai movies from the 1960s to the present.” The contextual nature of Gemini search then lets you ask follow-up questions to drill down deeper, such as “Show me the ones with an 80% or higher Rotten Tomatoes score that are available on HBO Max or Netflix.”

Gemini has much more in its bag of tricks: You can ask about anything you want, from questions about astronomy or astrology to recipes to top attractions to see when visiting cities. It can create news briefs with video links and also generate images from prompts (“Fantasy twilight landscape with white deer”) to use as screensavers.

The QM9K features a built-in presence sensor, and it can be configured to activate your AI-generated screensavers, or even a rotating photo gallery drawn from a Google Photos account, automatically when you enter the room.

A Live TV portal in Google TV provides a grid guide of broadcasts pulled in by the QM9K’s ATSC 3.0 tuner, including NextGen channels, and these are displayed along with Google TV Freeplay and TCL free ad-supported TV channels. Google TV gives you multiple options to sort these, including by genre (Reality TV, News, etc.) or antenna-only.

Both AirPlay and Chromecast built-in are supported by the QM9K, which also works with Alexa, Google Assistant and Apple HomeKit.

The QM9K’s quick menu lets you easily adjust basic settings (Image credit: Future)

There are extensive settings in the QM9K’s menus to satisfy picture and sound tweakers. The Brightness section of the Picture menu provides multiple gamma settings and contrast adjustments. For sound, there are various audio presets, including a custom Audio by Bang & Olufsen Beosonic adjustment that lets you EQ the sound based on parameters like Bright, Relaxed, Energetic, and Warm.

You can access these settings by pressing the gear icon on the remote control or by clicking the same icon on the Google TV home screen. Another option is to press the quick menu icon on the remote, which calls up a menu at the bottom of the screen with a range of picture and sound setup options.

- Smart TV & menus score: 4.5/5

TCL QM9K review: Gaming

The QM9K’s Game Bar menu (Image credit: Future)

- Two HDMI 2.1 ports with 4K 144Hz support

- FreeSync Premium Pro and Game Accelerator 288

- 12.9ms input lag is average

Gaming features on the QM9K include two HDMI 2.1 ports with 4K 144Hz support, FreeSync Premium Pro, ALLM, and Dolby Vision gaming. There is also a Game Accelerator 288 feature that lets you game at 288Hz in 1080p resolution and a Game Bar onscreen overlay for making quick adjustments to gaming-related settings.

The QM9K’s bright, contrast-rich picture makes all manner of games look great. Performance is also responsive, with the TCL measuring 12.9ms input lag when tested with a Leo Bodnar 4K input lag meter.

TCL QM9K review: Value

The QM9K’s full-size, fully backlit remote control (Image credit: Future)

- Priced higher than top mini-LED competition

- Less extensive gaming features than competition

- Google TV with Gemini enhances value

The TCL QM9K is a fantastic TV, but its raw value is taken down a notch by the fact that there is plenty of great mini-LED TV competition in 2025.

At the time of writing, TCL had already lowered the price of the 75-inch QM9K by $1,000 to $2,499. Even so, other 75-inch flagship mini-LED TVs such as the Samsung QN90F are now selling for $2,099. The QM9K has higher peak HDR brightness than the Samsung, and it also beats competitors such as the Hisense U8QG on that test. But the Samsung has superior gaming features and performance, and its great overall picture quality was one of the main reasons it earned a five-star overall rating in our Samsung QN90F review.

TCL’s own step-down mini-LED TV, the TCL QM8K, also provides very impressive picture quality, and it features the ZeroBorder screen, ultra wide viewing angle, and anti-reflection screen features found in the QM9K.

I’d have said that Google TV with Gemini, which is currently exclusive to the QM9K, was its ace in the hole when it comes to value, but that feature is also coming to the QM8K at some point in 2025, and it should also be available as an upgrade for the Hisense U8QG. The QM9K is the only TV of the three with a built-in presence sensor, so that is one exclusive feature it can claim.

Should I buy the TCL QM9K?

A Gemini-generated fantasy image, used as a screensaver (Image credit: Future)

| Attributes | Notes | Rating |
|---|---|---|
| Features | Google TV with Gemini, Dolby FlexConnect and comprehensive HDR support | 4.5/5 |
| Picture quality | Exceptional brightness and refined local dimming combined with an ultra wide viewing angle feature and anti-reflective screen make this a great all-around TV | 4.5/5 |
| Sound quality | Very good built-in sound from Audio by Bang & Olufsen Dolby Atmos speaker system | 4.5/5 |
| Design | ZeroBorder screen minimizes bezel for an all-picture look. Height-adjustable pedestal stand can clear space for a soundbar | 4/5 |
| Smart TV and menus | Google TV is enhanced by Gemini AI, plus the quick menu provides easy access to basic settings | 4.5/5 |
| Gaming | 4K 144Hz and FreeSync Premium Pro supported, but only on two HDMI ports | 4/5 |
| Value | A bit pricier than most of the current flagship mini-LED TV competition. Step-down QM8K model is a better overall value | 4/5 |

Buy it if…

Don’t buy it if…

TCL QM9K: Also consider…

| | TCL QM9K | Samsung QN90F | TCL QM8K | Hisense U8QG |
|---|---|---|---|---|
| Price (65-inch) | $2,999 | $2,499.99 | $2,499.99 | $2,199 |
| Screen type | QLED w/ mini-LED | QLED w/ mini-LED | QLED w/ mini-LED | QLED w/ mini-LED |
| Refresh rate | 144Hz | 165Hz | 144Hz | 165Hz |
| HDR support | Dolby Vision/HDR10+/HDR10/HLG | HDR10+/HDR10/HLG | Dolby Vision/HDR10+/HDR10/HLG | Dolby Vision/HDR10+/HDR10/HLG |
| Smart TV | Google TV (with Gemini) | Tizen | Google TV | Google TV |
| HDMI ports | 4 (2x HDMI 2.1) | 4x HDMI 2.1 | 4 (2x HDMI 2.1) | 3x HDMI 2.1 |

How I tested the TCL QM9K

Measuring a 10% HDR white window pattern during testing (Image credit: Future)

- I spent about 15 viewing hours in total measuring and evaluating

- Measurements were made using Calman color calibration software

- A full calibration was made before proceeding with subjective tests

When I test a TV, I first spend a few days or even weeks using it for casual viewing to assess the out-of-the-box picture presets and get familiar with its smart TV menu and picture adjustments.

I next select the most accurate preset (usually Filmmaker Mode, Movie or Cinema) and measure grayscale and color accuracy using Portrait Displays’ Calman color calibration software. The resulting measurements provide Delta-E values (the margin of error between the test pattern source and what’s shown on-screen) for each category, and allow for an assessment of the TV’s overall accuracy.

Along with those tests, I make measurements of peak light output (recorded in nits) for both standard high-definition and 4K high dynamic range using 10% and 100% white window patterns. Coverage of DCI-P3 and BT.2020 color space is also measured, with the results providing a sense of how faithfully the TV can render the extended color range in ultra high-definition sources – you can read more about this process in our guide to how we test TVs at TechRadar.

For the TCL QM9K, I used the Calman ISF workflow, along with the TV’s advanced picture menu settings, to calibrate the image for best accuracy. I also watched a range of reference scenes on 4K Blu-ray discs to assess the TV’s performance, along with 4K HDR shows streamed from Max, Netflix, and other services.
