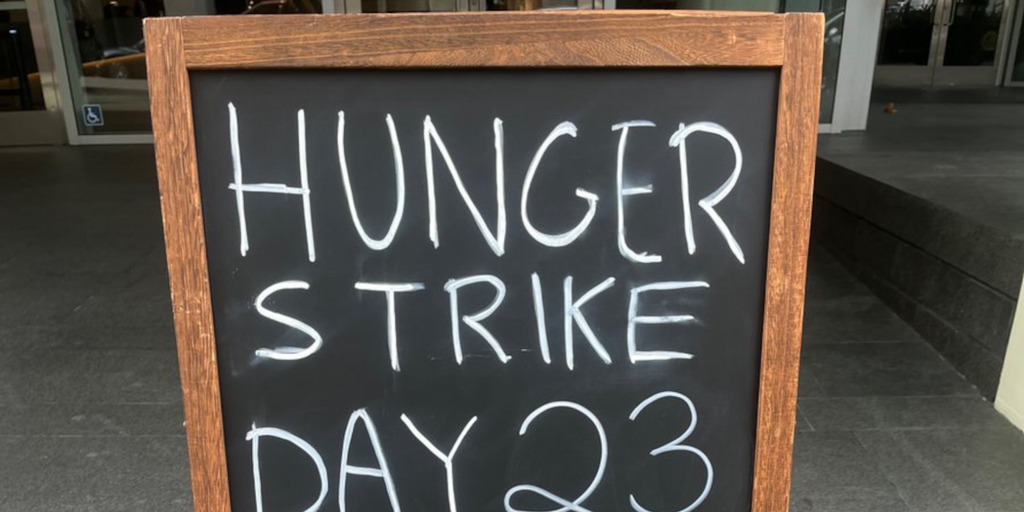

> Hi, it’s Guido on hunger strike Day 23 outside the offices of Anthropic, going strong.
>
> There is a great and profound evil in this place.
>
> Each day, I sit and watch at lunch time and dinner time as delivery drivers on scooters and bikes stop at the curb and bring packages of… pic.twitter.com/vnYEGvzPOv
>
> Guido Reichstadter (@wolflovesmelon), September 25, 2025

> Hi, my name’s Michaël Trazzi, and I’m outside the offices of the AI company Google DeepMind right now because we are in an emergency.
>
> I am here in support of Guido Reichstadter, who is also on hunger strike in front of the office of the AI company Anthropic.
>
> DeepMind, Anthropic… https://t.co/RJQCGxwTPY pic.twitter.com/KsCeVkcky8
>
> Michaël (in London) Trazzi (@MichaelTrazzi), September 5, 2025